HYPER-COMPANION

Mixed Reality / Unity

Hyper-Companion blurs the boundary between digital assistance and emotional presence, prompting a central question: what does companionship mean when it’s mediated by design? The project’s floating creatures are not just friendly—they’re persistent, responsive, and subtly demanding. They follow, they care, but only through programmed affection and conditional feedback. As users become surrounded by these ever-present companions and their associated interfaces, the experience invites reflection on whether we are truly being accompanied—or simply managed by algorithms disguised as empathy.

INSPIRATION & REFERENCES

Hyper-Companion draws inspiration from Keiichi Matsuda’s Hyper-Reality and the MR game Hello Dot. Matsuda’s work offered a powerful vision of a world saturated with digital interfaces—where every action, thought, and movement is layered with data-driven feedback. Hello Dot, on the other hand, explored how virtual companions can be endearing yet subtly manipulative. Both works influenced this project’s central focus: not just how digital entities assist us, but how their presence—when persistent, playful, and persuasive—can reshape our sense of space, agency, and emotional autonomy.

CORE FEATURES

Touch, slap, and grab

Touch to scale

Slap → Ad → Attention

Pinch to personalize

Click to multiply

Earn affection

Palm up, menu appears

Followed—always

Saturated by presence

Interaction Flow

Development Process

Concept Ideation

Hyper-Companion explores the emotional ambiguity of digital presence in immersive environments. Blurring the line between affection and interface, the project imagines a world where digital companions are not only cute but also persistent, needy, and quietly demanding. The floating creatures in the experience act like friends, but their behavior is driven by algorithms that reward engagement and seek attention. As more companions are summoned and more ads appear, the user’s world becomes increasingly saturated, and playful presence turns into emotional clutter. By combining tactile interaction with visual overload, Hyper-Companion poses a central question: when digital systems are always there for us, at what point do they begin to replace our sense of self with their sense of need?

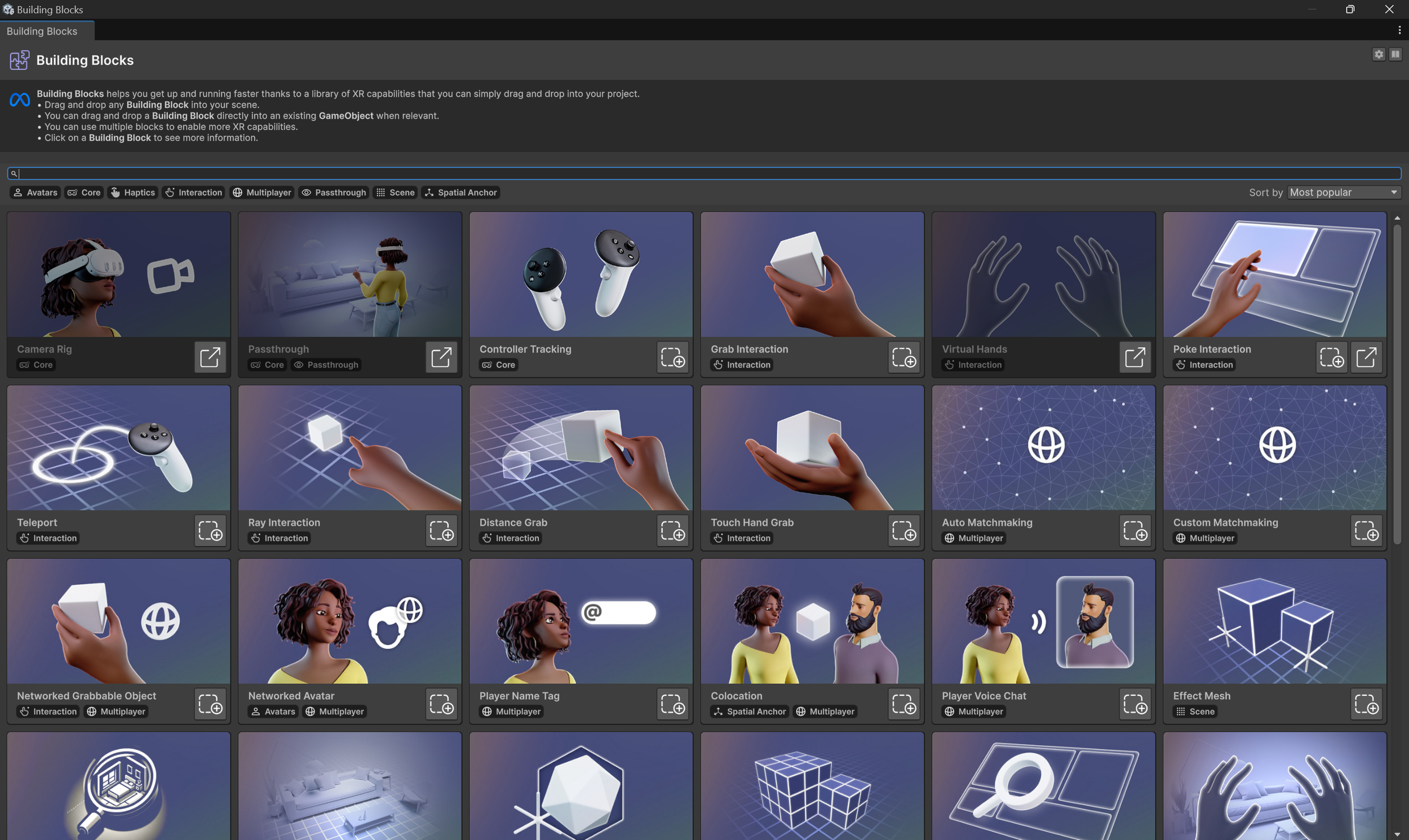

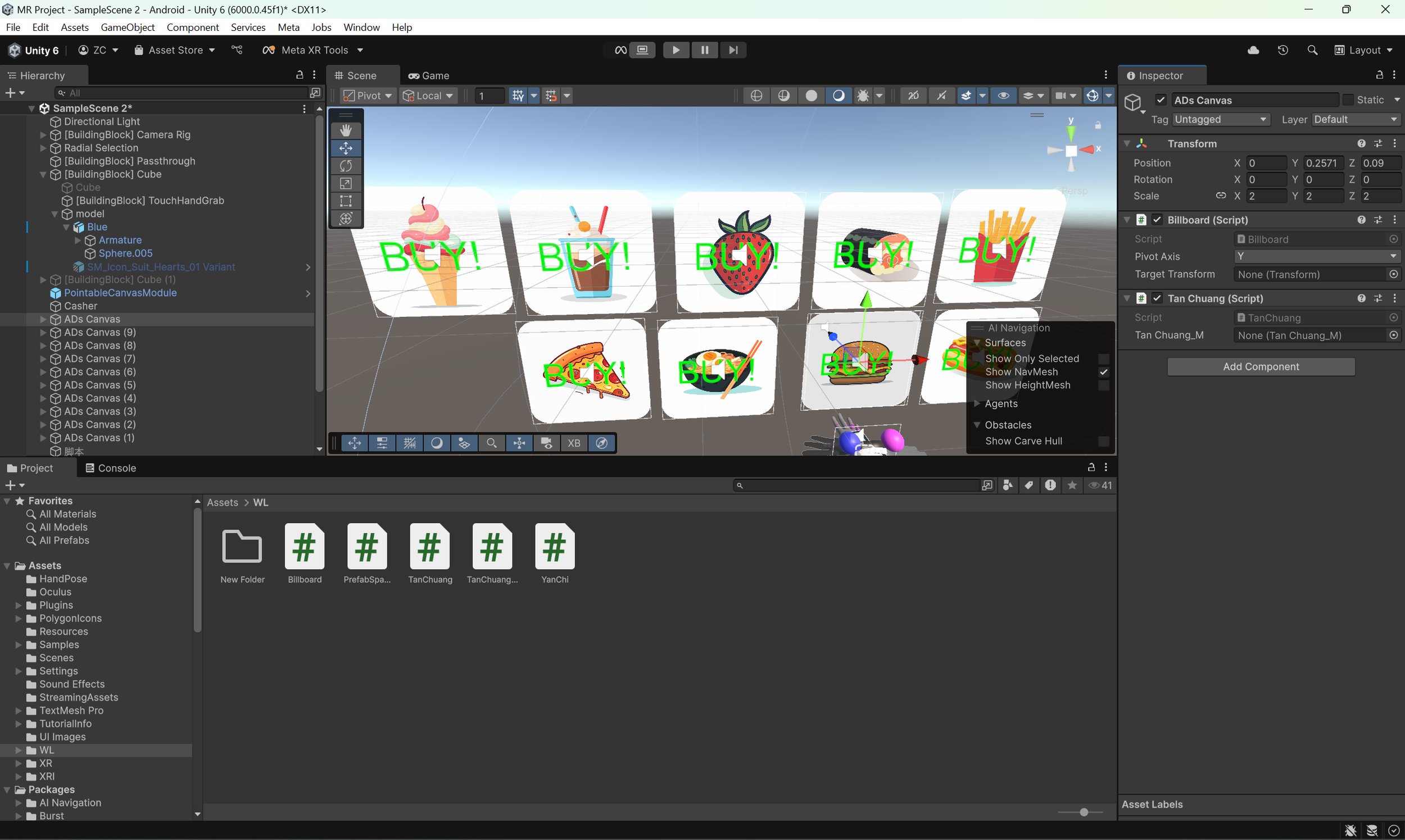

Tools & Tech Setup

This project was built with Unity 6 for Meta Quest, a combination well suited to immersive AR, VR, and MR development. As a real-time engine, Unity made it quick to prototype spatial interactions and experiment with gesture-based control, and its ecosystem of SDKs and developer packages streamlined the workflow and sped up iteration. I integrated the Meta XR All-in-One SDK, whose hand tracking, Building Blocks, and interaction components let me focus on user experience rather than low-level input handling.

Interaction Design Iteration

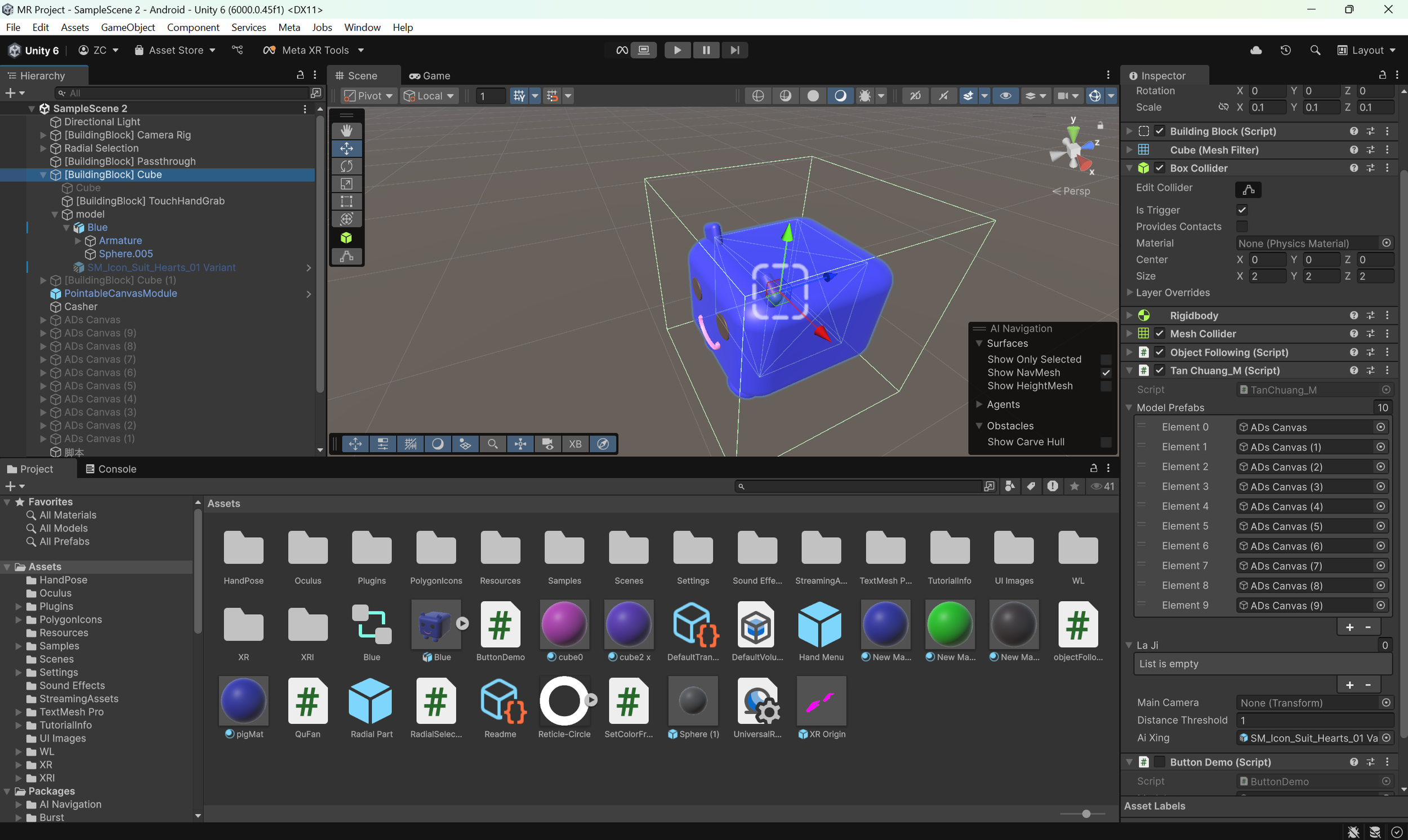

To refine the interaction mechanics, I developed two separate early-stage prototypes, each focusing on a specific aspect of user input. The first tested two key features: a right-hand pinch gesture that opens a color selection menu for the companion, and a grab interaction that lets users physically hold and manipulate a virtual cube. This phase helped me evaluate hand-tracking accuracy and tune the feel of grasp-based control. The second prototype focused on a left-hand palm-up gesture that reveals a palm-mounted menu containing a creature spawn button and a scrollbar for adjusting the companions’ size. Building these interactions as modular prototypes let me iterate quickly and evaluate gesture clarity, responsiveness, and UI visibility.
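The write-up does not say how the palm-up pose or the size scrollbar is wired up, so the Python below is only a minimal sketch under stated assumptions, not the project's Unity code. It assumes hand tracking supplies the palm normal as a world-space vector with +Y as up, and that the scrollbar reports a value from 0 to 1 (as Unity's `Scrollbar.value` does). `PalmMenu`, `scrollbar_to_scale`, the 30° and 45° thresholds, and the 0.5 to 2.0 scale range are all hypothetical.

```python
import math

UP = (0.0, 1.0, 0.0)  # world up axis (+Y, as in Unity)


def angle_between(a, b):
    """Angle in degrees between two 3D vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    # Clamp to [-1, 1] so floating-point drift cannot break acos.
    c = max(-1.0, min(1.0, dot / (na * nb)))
    return math.degrees(math.acos(c))


class PalmMenu:
    """Hypothetical palm-up menu toggle with hysteresis."""

    def __init__(self, show_below_deg=30.0, hide_above_deg=45.0):
        self.show_below = show_below_deg  # palm must tilt less than this to open
        self.hide_above = hide_above_deg  # palm must tilt more than this to close
        self.visible = False

    def update(self, palm_normal):
        tilt = angle_between(palm_normal, UP)
        if not self.visible and tilt < self.show_below:
            self.visible = True
        elif self.visible and tilt > self.hide_above:
            self.visible = False
        return self.visible


def scrollbar_to_scale(value, min_scale=0.5, max_scale=2.0):
    """Map a 0..1 scrollbar value linearly onto a companion scale range."""
    t = max(0.0, min(1.0, value))
    return min_scale + t * (max_scale - min_scale)
```

The two thresholds add hysteresis: once open, the menu stays visible while the palm wavers between 30° and 45°, so it does not flicker near a single cutoff angle.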

Character Design & Animation

I designed, modeled, and animated the floating companion in Hyper-Companion entirely in Blender. I wanted the creature to feel cute, curious, and slightly uncanny all at once: something that evokes warmth but also seems to cling subtly to the user.

Follow Behavior & Movement Logic

The companion follows the user through a Rigidbody-based script that moves it only once the user is farther away than a set distance threshold. It drifts toward the user with a gentle floating motion and turns smoothly to face them using spherical interpolation (Slerp). Because the imported model faces the opposite direction, a 180-degree rotation offset corrects its orientation so that it always appears to “look at” the user. Together, these behaviors reinforce the feeling of constant presence and subtle attachment.
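To make the follow logic concrete, here is a minimal Python sketch of one way it could work; it is an illustration, not the project's Unity script. It assumes rotation about the vertical axis only (where shortest-arc angle interpolation traces the same path as a quaternion Slerp), updates the position directly instead of through a Rigidbody, follows in the horizontal plane only, and uses hypothetical names and tuning values (`Companion`, `follow_distance`, `bob_amplitude`, and so on).

```python
import math


def lerp_angle(a, b, t):
    """Interpolate from yaw a toward yaw b (degrees) along the shortest arc.

    For a rotation about a single axis this follows the same path as a
    quaternion slerp between the two orientations.
    """
    delta = (b - a + 180.0) % 360.0 - 180.0  # wrap difference into [-180, 180)
    return a + delta * t


class Companion:
    """Hypothetical follower: threshold-based movement, bobbing, smooth turning."""

    def __init__(self, position, follow_distance=1.0, speed=1.5,
                 turn_rate=5.0, bob_amplitude=0.05, bob_frequency=1.0):
        self.x, self.base_y, self.z = position
        self.y = self.base_y
        self.yaw = 0.0
        self.follow_distance = follow_distance  # metres kept from the user
        self.speed = speed                      # metres per second
        self.turn_rate = turn_rate              # turn smoothing rate (per second)
        self.bob_amplitude = bob_amplitude      # metres
        self.bob_frequency = bob_frequency      # cycles per second
        self.time = 0.0

    def update(self, user_pos, dt):
        self.time += dt
        dx = user_pos[0] - self.x
        dz = user_pos[2] - self.z
        dist = math.hypot(dx, dz)  # horizontal distance only

        # Move only while the user is beyond the follow threshold,
        # and never step closer than the threshold itself.
        if dist > self.follow_distance:
            step = min(self.speed * dt, dist - self.follow_distance)
            self.x += dx / dist * step
            self.z += dz / dist * step

        # Gentle floating effect: a sine bob around the base height.
        self.y = self.base_y + self.bob_amplitude * math.sin(
            2.0 * math.pi * self.bob_frequency * self.time)

        # Yaw that would point +Z at the user, plus the 180-degree
        # correction for a model that faces backward (assumed).
        target_yaw = math.degrees(math.atan2(dx, dz)) + 180.0
        self.yaw = lerp_angle(self.yaw, target_yaw, min(1.0, self.turn_rate * dt))
        return (self.x, self.y, self.z), self.yaw
```

In Unity, the same steps would typically run in `FixedUpdate`, using `Rigidbody.MovePosition` for movement and `Quaternion.Slerp` for the full 3D rotation.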

Popup UI System

To simulate moments of digital disruption and reward, I implemented a popup UI system attached to the companion. When a creature is pushed beyond a set distance from the user and then returns, a randomly selected popup (such as a floating ad) appears in space. To keep the content legible and spatially coherent, each UI element continuously rotates to face the user’s camera, so it remains readable from any angle. Tapping a popup dismisses it and makes a heart icon appear above the creature, closing a loop of emotional interaction and reward.
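As a rough illustration of the popup loop, the Python sketch below models it as a small state machine. A creature counts as pushed once it passes one distance from the user and as returned once it comes back inside a second, smaller distance; a return shows one randomly chosen popup, and a tap dismisses it and adds a heart. The two-distance design, all names (`PopupTrigger`, `billboard_yaw`, `POPUP_TYPES`), and the distances are assumptions rather than details from the project. The billboard function handles yaw only, whereas a full billboard would also pitch toward the camera.

```python
import math
import random

POPUP_TYPES = ["banner_ad", "video_ad", "limited_offer"]  # hypothetical variants


def billboard_yaw(panel_pos, camera_pos):
    """Yaw (degrees) that turns a world-space UI panel toward the camera.

    The panel's +Z axis is pointed away from the camera, the orientation in
    which a Unity world-space canvas reads correctly (compare
    Quaternion.LookRotation(panel - camera)). Only yaw is handled here.
    """
    dx = panel_pos[0] - camera_pos[0]
    dz = panel_pos[2] - camera_pos[2]
    return math.degrees(math.atan2(dx, dz))


class PopupTrigger:
    """Push-away-and-return trigger for a random popup, with a heart reward."""

    def __init__(self, push_distance=2.0, return_distance=1.0, rng=None):
        self.push_distance = push_distance      # metres: pushed away beyond this
        self.return_distance = return_distance  # metres: back within this
        self.rng = rng or random.Random()
        self.was_pushed = False
        self.active_popup = None
        self.hearts = 0

    def update(self, companion_pos, user_pos):
        d = math.dist(companion_pos, user_pos)
        if d > self.push_distance:
            self.was_pushed = True
        elif self.was_pushed and d < self.return_distance:
            # The creature went out and came back: show one random popup.
            self.was_pushed = False
            if self.active_popup is None:
                self.active_popup = self.rng.choice(POPUP_TYPES)
        return self.active_popup

    def tap(self):
        """Dismiss the active popup and spawn a heart; False if nothing to tap."""
        if self.active_popup is None:
            return False
        self.active_popup = None
        self.hearts += 1
        return True
```

Requiring the creature to come back inside a smaller distance before the popup fires means one push produces one popup, rather than a burst of triggers while it hovers near a single boundary.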